Facial Expression Recognition with Landmark Detection

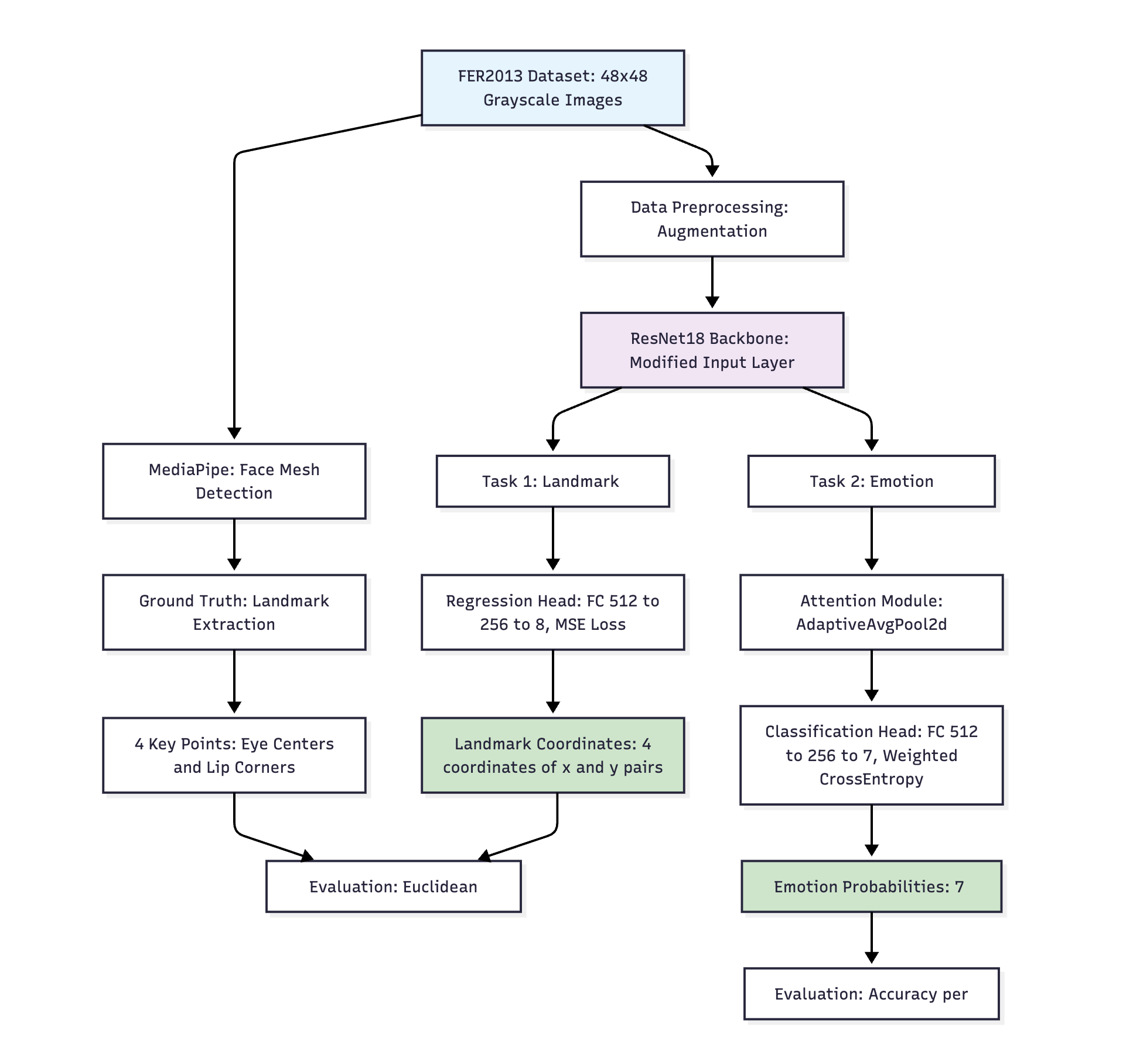

A two-stage facial expression recognition pipeline using MediaPipe for landmark detection and a modified ResNet18 with self-attention for emotion classification.

Overview

This project develops a two-stage facial expression recognition pipeline on the FER2013 dataset:

- Facial landmark detection using MediaPipe to extract key points.

- Emotion classification with a modified ResNet18 enhanced by self-attention.

Implemented in PyTorch, the system addresses class imbalance and low-resolution input, achieving 52.38% accuracy on the imbalanced dataset, with a 4.2% improvement attributed to the attention mechanism.

Dataset

- FER2013 consists of 35,887 grayscale 48x48 pixel images across 7 emotion classes (Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral), split into training (28,709), public test (3,589), and private test (3,589) sets.

- The dataset presents two main challenges: class imbalance (e.g., Disgust has only 547 samples) and face detection failures on roughly 18% of the low-resolution images.

For more details, refer to the FER2013 dataset documentation.

Core Challenge: Handling noisy, imbalanced facial data for accurate landmark extraction and emotion prediction.

Methodology

Data Preprocessing and Augmentation

Note: Images are normalized and augmented with random rotation, zoom, and horizontal flips to improve model robustness.

Part 1: Facial Landmark Detection

MediaPipe provides the reference ("ground-truth") landmarks, which are used to train a ResNet18 regressor that predicts 4 key points (two eye centers and two lip corners), i.e. 8 output coordinates.
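One way to turn a full face mesh into the 8-value regression target is sketched below. It assumes the mesh is already available as an array of normalized (x, y) points; the landmark indices, the choice of averaging two eye corners to approximate an eye center, and the helper name `landmarks_to_target` are all assumptions for illustration and should be checked against the MediaPipe mesh topology actually used.

```python
import numpy as np

# Assumed Face Mesh indices: eye corners 33/133 and 362/263, mouth corners 61/291.
# Which eye counts as "left" depends on the convention (subject vs. image side).
KEYPOINT_INDICES = {
    "eye_a_center": (33, 133),
    "eye_b_center": (362, 263),
    "lip_corner_a": (61,),
    "lip_corner_b": (291,),
}


def landmarks_to_target(mesh_xy):
    """Reduce an (N, 2) array of normalized mesh points to an 8-value target.

    Returns None when no face was detected so the sample can be skipped.
    """
    if mesh_xy is None:
        return None
    mesh_xy = np.asarray(mesh_xy, dtype=np.float32)
    # Each key point is the mean of its listed mesh points.
    points = [mesh_xy[list(idx)].mean(axis=0) for idx in KEYPOINT_INDICES.values()]
    return np.concatenate(points)  # [x1, y1, x2, y2, x3, y3, x4, y4]


# Random stand-in for a 468-point mesh in normalized coordinates.
mesh = np.random.default_rng(0).random((468, 2))
target = landmarks_to_target(mesh)
print(target.shape)
```

Returning `None` for undetected faces keeps the failure cases explicit, so they can be filtered out before training.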

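```python
import torch.nn as nn
from torchvision import models


class LandmarkDetectionModel(nn.Module):
    """ResNet18 backbone with a regression head for facial landmarks."""

    def __init__(self, num_landmarks=8):  # 4 points x (x, y)
        super().__init__()
        # ImageNet-pre-trained backbone
        self.resnet = models.resnet18(weights=models.ResNet18_Weights.DEFAULT)
        # Replace the classifier with a head that regresses landmark coordinates
        self.resnet.fc = nn.Sequential(
            nn.Linear(self.resnet.fc.in_features, 256),
            nn.ReLU(),
            nn.Dropout(0.3),
            nn.Linear(256, num_landmarks),
        )

    def forward(self, x):
        # x: (N, 3, H, W) -> (N, num_landmarks)
        return self.resnet(x)
```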

Part 2: Emotion Classification with Attention

The pre-trained ResNet18 is extended with a self-attention block that reweights the final 512-channel feature map before pooling, and its classifier is replaced by a two-layer head with dropout.

```python
import torch
import torch.nn as nn
from torchvision import models


class EmotionClassificationModel(nn.Module):
    def __init__(self, num_classes=7):
        super().__init__()

        # Load pre-trained ResNet18
        self.resnet = models.resnet18(weights=models.ResNet18_Weights.DEFAULT)

        # Re-create the first conv layer and copy the pre-trained weights into it.
        # The layer still expects 3-channel input, so grayscale images must be
        # replicated to 3 channels before they reach the model.
        original_weight = self.resnet.conv1.weight.detach().clone()
        self.resnet.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
        with torch.no_grad():
            self.resnet.conv1.weight.copy_(original_weight)

        # Replace the final fully connected layer with the emotion classification head
        num_features = self.resnet.fc.in_features
        self.resnet.fc = nn.Sequential(
            nn.Dropout(0.3),
            nn.Linear(num_features, 256),
            nn.ReLU(),
            nn.Dropout(0.3),
            nn.Linear(256, num_classes),
        )

        # Attention block: one weight in (0, 1) per channel of the layer4 output
        self.attention = nn.Sequential(
            nn.AdaptiveAvgPool2d(1),  # global average pooling
            nn.Conv2d(512, 32, kernel_size=1),
            nn.ReLU(),
            nn.Conv2d(32, 512, kernel_size=1),
            nn.Sigmoid(),
        )

    def forward(self, x):
        # Run the ResNet18 stem and residual stages to get intermediate features
        x = self.resnet.conv1(x)
        x = self.resnet.bn1(x)
        x = self.resnet.relu(x)
        x = self.resnet.maxpool(x)
        x = self.resnet.layer1(x)
        x = self.resnet.layer2(x)
        x = self.resnet.layer3(x)
        x = self.resnet.layer4(x)

        att = self.attention(x)  # attention weights, shape (N, 512, 1, 1)
        x = x * att              # reweight the feature map

        x = self.resnet.avgpool(x)
        x = torch.flatten(x, 1)
        x = self.resnet.fc(x)
        return x
```
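The attention block in the model above pools each channel globally before computing its weight, so it acts as a channel-wise gate (similar in spirit to squeeze-and-excitation) rather than attending over spatial positions. The standalone sketch below isolates that block to show the shapes involved; the class name `ChannelGate` and the dummy feature map are assumptions for illustration.

```python
import torch
import torch.nn as nn


class ChannelGate(nn.Module):  # hypothetical name for the isolated attention block
    def __init__(self, channels=512, reduced=32):
        super().__init__()
        self.gate = nn.Sequential(
            nn.AdaptiveAvgPool2d(1),                      # (N, C, H, W) -> (N, C, 1, 1)
            nn.Conv2d(channels, reduced, kernel_size=1),  # bottleneck
            nn.ReLU(),
            nn.Conv2d(reduced, channels, kernel_size=1),  # back to C channels
            nn.Sigmoid(),                                 # one weight in (0, 1) per channel
        )

    def forward(self, x):
        weights = self.gate(x)     # (N, C, 1, 1)
        return x * weights, weights  # weights broadcast over H and W


# For 48x48 inputs, ResNet18's layer4 output is a 2x2 map with 512 channels.
features = torch.randn(2, 512, 2, 2)
gated, weights = ChannelGate()(features)
print(gated.shape, weights.shape)
```

Because the weights have spatial size 1x1, every position within a channel is scaled by the same factor.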

- Training: Class-weighted CrossEntropyLoss, AdamW (lr=0.0005, weight_decay=9e-2), early stopping.
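The training setup listed above could be wired together roughly as follows. The inverse-frequency weighting scheme, the per-class counts (commonly reported for the FER2013 training split; recompute them from your own data), the patience value, and the `EarlyStopping` helper are assumptions, as only the loss type, optimizer, and its two hyperparameters are stated here.

```python
import torch
import torch.nn as nn

# Assumed training counts, in label order Angry..Neutral; verify against your split.
class_counts = {"Angry": 3995, "Disgust": 436, "Fear": 4097, "Happy": 7215,
                "Sad": 4830, "Surprise": 3171, "Neutral": 4965}
counts = torch.tensor(list(class_counts.values()), dtype=torch.float32)

# Inverse-frequency weights: total / (num_classes * count); rare classes weigh more.
class_weights = counts.sum() / (len(counts) * counts)
criterion = nn.CrossEntropyLoss(weight=class_weights)

model = nn.Linear(512, 7)  # stand-in for the real network
optimizer = torch.optim.AdamW(model.parameters(), lr=5e-4, weight_decay=9e-2)


class EarlyStopping:
    """Signal a stop after `patience` epochs without validation-loss improvement."""

    def __init__(self, patience=5):
        self.patience = patience
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


stopper = EarlyStopping(patience=2)
stopped_at = None
for epoch, val_loss in enumerate([1.0, 0.9, 0.95, 0.97]):  # made-up validation losses
    if stopper.step(val_loss):
        stopped_at = epoch
        break
print(class_weights, stopped_at)
```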

Results & Analysis

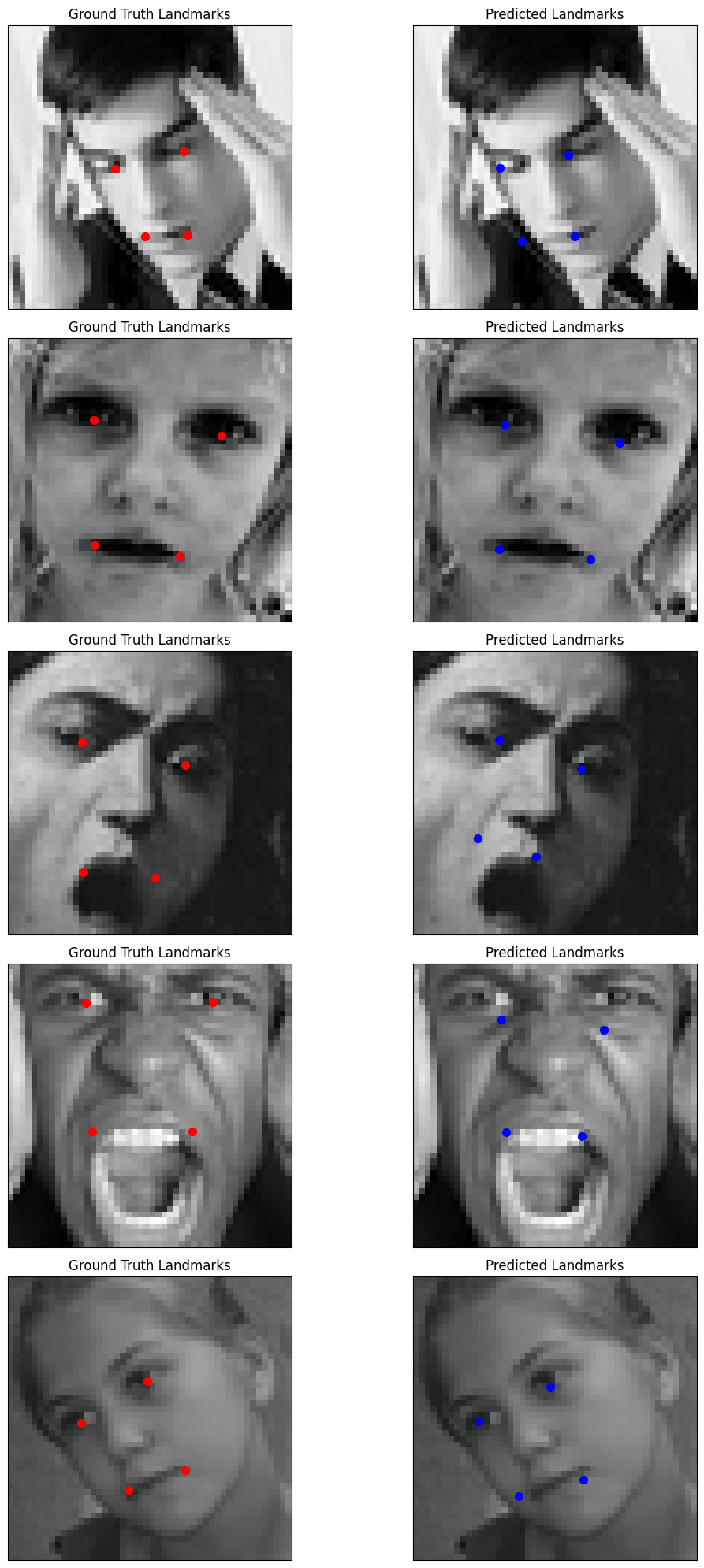

Landmark Detection Performance

| Landmark | Average Error (Euclidean Distance) |

|---|---|

| Left Eye Center | 0.0382 |

| Right Eye Center | 0.0398 |

| Left Lip Corner | 0.0471 |

| Right Lip Corner | 0.0433 |

- Average Error: 0.0386 (Euclidean distance in coordinates normalized to image size, not pixels)
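A per-landmark Euclidean error of this kind can be computed as in the sketch below. The function name, the `[x1, y1, ..., x4, y4]` layout, and the toy values are assumptions; the conversion to pixels only holds if coordinates are normalized by the 48-pixel image side.

```python
import numpy as np


def per_landmark_error(pred, target):
    """Mean Euclidean distance per landmark.

    pred, target: (N, 8) arrays laid out as [x1, y1, ..., x4, y4] in normalized coordinates.
    """
    pred = np.asarray(pred, dtype=np.float64).reshape(-1, 4, 2)
    target = np.asarray(target, dtype=np.float64).reshape(-1, 4, 2)
    dist = np.linalg.norm(pred - target, axis=2)  # (N, 4) distance per sample and landmark
    return dist.mean(axis=0)                      # (4,) average over samples


# Toy example: only the first landmark is off, by (0.03, 0.04), i.e. a distance of 0.05.
target = np.array([[0.30, 0.40, 0.70, 0.40, 0.35, 0.75, 0.65, 0.75]])
pred = target.copy()
pred[0, 0] += 0.03
pred[0, 1] += 0.04

errors = per_landmark_error(pred, target)
print(errors)              # per-landmark error in normalized units
print(errors.mean() * 48)  # rough pixel equivalent for a 48x48 image
```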

The visualization above shows the predicted landmarks (blue) against the ground truth (red) on sample images. The model captures the key facial features on these samples, which suggests it tolerates the noise and low resolution of FER2013 reasonably well.

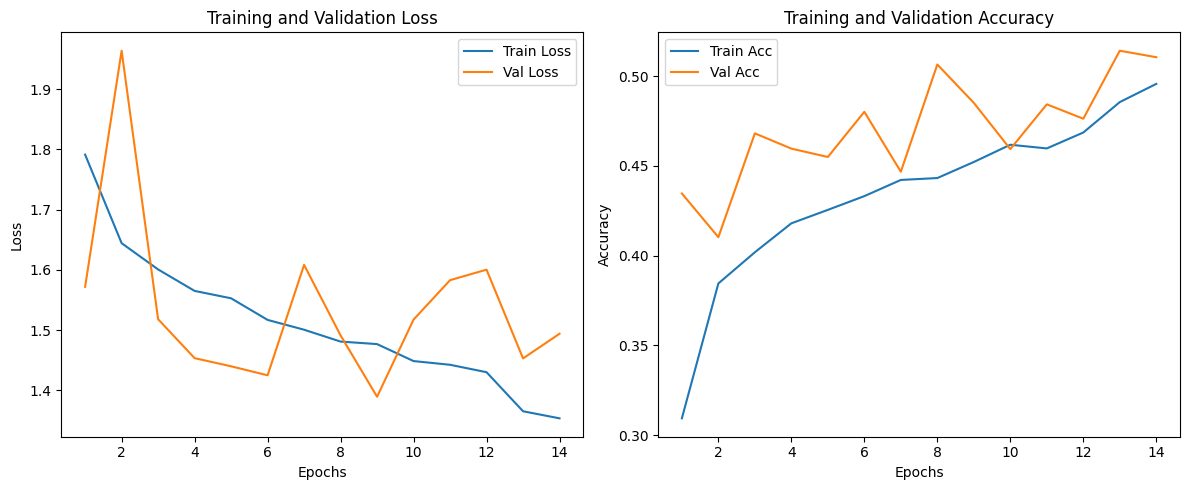

Emotion Classification

The diagnostic plots:

The emotion classification model achieved the following performance metrics on the FER2013 dataset:

| Emotion | Accuracy | Sample Count |

|---|---|---|

| Happy | 82.82% | 1,983 |

| Surprise | 74.28% | 831 |

| Neutral | 73.64% | 1,234 |

| Fear | 11.74% | 764 |

| Disgust | 38.18% | 123 |

| Overall | 52.38% | 3,589 |
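Per-class figures like those in the table can be derived from a confusion matrix, as in this sketch. The helper name and the toy labels are assumptions; note that per-class accuracy computed this way is the same quantity as per-class recall.

```python
import numpy as np

CLASSES = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]


def per_class_accuracy(y_true, y_pred, num_classes=7):
    """Return (accuracy per class, sample count per class, overall accuracy)."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    confusion = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(confusion, (y_true, y_pred), 1)  # rows: true class, columns: predicted class
    support = confusion.sum(axis=1)
    correct = np.diag(confusion)
    # Classes with no samples get NaN instead of a division by zero.
    acc = np.divide(correct, support, out=np.full(num_classes, np.nan), where=support > 0)
    overall = correct.sum() / support.sum()
    return acc, support, overall


# Toy labels: three Happy (3), two Disgust (1), one Neutral (6).
y_true = [3, 3, 3, 1, 1, 6]
y_pred = [3, 3, 6, 1, 3, 6]
acc, support, overall = per_class_accuracy(y_true, y_pred)
for name, a, n in zip(CLASSES, acc, support):
    print(f"{name:9s} acc={a:.2%} n={n}")
print(f"Overall   acc={overall:.2%}")
```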

Key findings:

- Attention Mechanism Impact: Self-attention improved accuracy by 4.2% over the baseline ResNet18, suggesting that focusing on emotion-relevant facial features is worthwhile.

- Class Imbalance Reality: The model performed well on majority classes but struggled with minority emotions, reflecting the imbalance in the dataset.

- Data Quality Constraints: The 18% face detection failure rate highlights the limits of working with low-resolution facial data.

- Architecture Choices: ResNet18 with attention struck a good balance between model complexity and performance for this constrained dataset.

Future Work

- Address class imbalance using oversampling, undersampling, or synthetic data.

- Explore advanced methods like transfer learning, ensemble models, or Vision Transformers (ViTs) for improved feature extraction and accuracy.
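As an illustration of the oversampling option, the sketch below draws training samples with probability inversely proportional to class frequency using PyTorch's `WeightedRandomSampler`. The toy two-class dataset and the batch size are assumptions, and this is not part of the current pipeline.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset, WeightedRandomSampler

torch.manual_seed(0)

# Toy imbalanced dataset: 90 samples of class 0, 10 of class 1.
labels = torch.tensor([0] * 90 + [1] * 10)
images = torch.zeros(len(labels), 1, 48, 48)
dataset = TensorDataset(images, labels)

# Each sample's weight is the inverse of its class frequency.
class_counts = torch.bincount(labels)
sample_weights = (1.0 / class_counts.float())[labels]

# Sampling with replacement lets minority samples appear several times per epoch.
sampler = WeightedRandomSampler(sample_weights, num_samples=len(labels), replacement=True)
loader = DataLoader(dataset, batch_size=20, sampler=sampler)

drawn = torch.cat([y for _, y in loader])
print(torch.bincount(drawn, minlength=2))  # roughly balanced class counts
```

Oversampling and class-weighted loss both counteract imbalance, so combining them may over-correct; which one to use is a tuning decision.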